Build in Public: Week 2. How Do People Even Find Influencers?

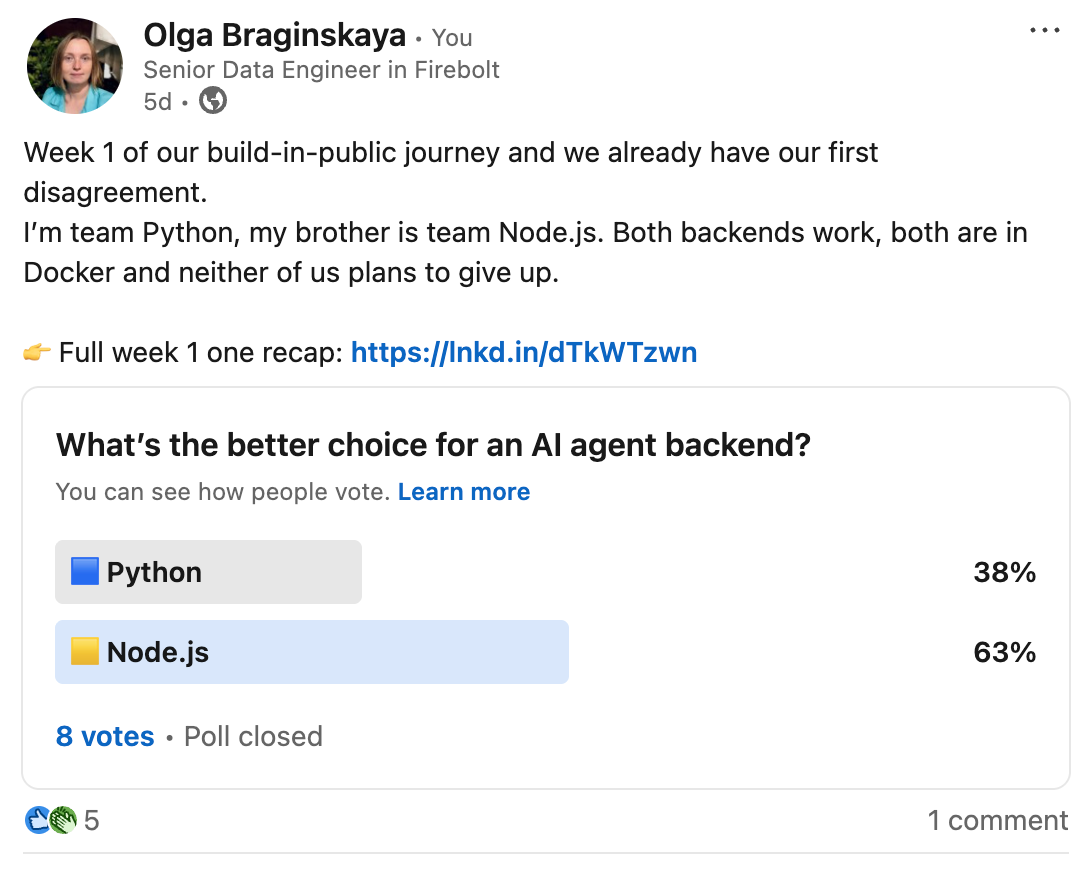

If you remember from the last update, we had our first real conflict: Node.js vs Python. Well, democracy has spoken. My "many" LinkedIn followers voted and the winner is Node.js. So this week we’re continuing with one backend, one direction and slightly fewer arguments. But I’m still going to run experiments and do the analysis in Python, sorry not sorry.

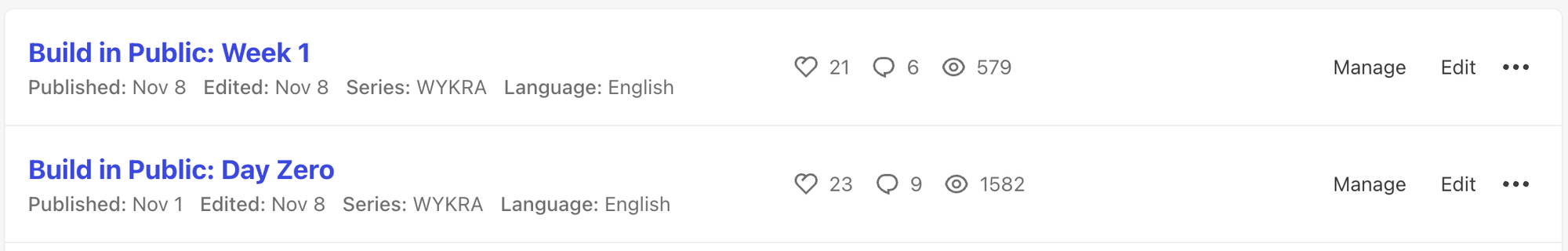

I also wanted to share how the Build in Public posts have done so far: Day Zero passed 1.5k views, Week 1 is close to 600, and together they brought in a nice mix of comments and reactions.

In the same week my personal blog on datobra.com gained a shocking total of two new followers, which I honestly count as an achievement. In a world where 90% of internet text is written by models, getting real humans to read anything keeps getting harder. Some days it feels like blogs are mostly read by AI agents rather than actual humans, which I guess means that if AI ever replaces all of us, I'll at least have a very loyal audience.

I also have Google Analytics on the blog, but so far it either updates painfully slowly or simply ignores traffic from Dev.to reposts. And Dev.to's built-in stats don't say much about where readers come from or which countries they're in. At some point I'll need a proper analytics setup, but for now I'm mostly guessing. Maybe the answer is to pay for yet another tool; why stop now, right?

The part no one warns you about

When you start building something of your own (an open-source tool, a startup or even a simple blog), no one tells you that the actual coding or writing will take maybe half of your time on a good week. The rest quietly turns into research, planning, positioning, marketing, brand decisions and the never-ending "wait, who is this actually for?" loop.

So this week we did the obvious-but-avoided thing: we found a real marketer who actually runs influencer campaigns, sat them down and asked the boring-but-critical questions - how do you search, what tools do you trust, what looks good, what looks suspicious and what’s a total time sink? Since we’re building an agent to help with this, we wanted the manual reality, not assumptions.

Spoiler: it’s way less automated than we expected and way more like detective work. Here’s what we learned.

What works better: paid ads or influencer marketing?

Both work, just for different things. Influencer posts perform better when the product is clear and straightforward. Paid ads feel more like throwing darts in the dark and hoping the algorithm shows mercy that day.

How do marketers classify influencers?

Mostly by follower count. The rough tiers are:

• Micro: <10K

• Mid: 10–50K

• Macro: 50–100K+

• Million-plus creators
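If you want to reuse these tiers in code, they boil down to a lookup on follower count. Here is a minimal sketch. It assumes half-open boundaries, so exactly 10K counts as Mid. It also lumps everything from 100K up to 1M into Macro, because the list above has no separate bucket for that range. `classify_tier` is a hypothetical helper name.

```python
import bisect

# Lower bound (in followers) of each tier. Assumptions: boundaries are half-open
# (10_000 is "mid", not "micro"), and 100K..1M is lumped into "macro" because
# the tier list has no separate bucket for it.
TIER_BOUNDS = [0, 10_000, 50_000, 1_000_000]
TIER_NAMES = ["micro", "mid", "macro", "million-plus"]


def classify_tier(followers: int) -> str:
    """Map a follower count to the rough tier names marketers use."""
    if followers < 0:
        raise ValueError("follower count cannot be negative")
    # bisect_right counts how many lower bounds are <= followers.
    index = bisect.bisect_right(TIER_BOUNDS, followers) - 1
    return TIER_NAMES[index]


print(classify_tier(42_000))  # mid
```

A sorted list of lower bounds keeps the tiers easy to tweak. If you decide 100K–1M deserves its own name, you add one bound and one label.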

Interestingly, collaborations with creators under 100K followers often convert best: they're big enough to matter and still human enough to trust.

How do they actually find influencers?

• Instagram hashtag searches

• Aggregators like LiveDune or PopularMetrics

• Instagram Explore page

How do they check if an influencer is "real" or inflated?

• they look at how much post reach varies: identical numbers across posts are usually a sign something’s off

• they read the comments: they should make sense for the post, not be random emojis or sudden floods on a quiet post; and yes, "activity chats" where creators buy or trade comments are very much a thing
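Both checks can be turned into rough numbers. Here is a minimal sketch, assuming you already have per-post reach figures and comment texts for an account. It measures how much reach varies using the coefficient of variation (standard deviation divided by mean). It also measures what share of comments contain no letters or digits at all. The thresholds are placeholder guesses of ours, not numbers the marketer gave us, and the function names are hypothetical.

```python
import statistics


def reach_variation(reaches: list[int]) -> float:
    """Coefficient of variation (population stdev / mean) of per-post reach.

    Real accounts usually swing a lot from post to post; a value near 0
    means the numbers are suspiciously uniform.
    """
    if len(reaches) < 2:
        raise ValueError("need at least two posts to measure variation")
    mean = statistics.mean(reaches)
    if mean == 0:
        return 0.0
    return statistics.pstdev(reaches) / mean


def low_effort_share(comments: list[str]) -> float:
    """Share of comments with no letters or digits (emoji-only, '!!!', etc.)."""
    if not comments:
        return 0.0
    low_effort = [c for c in comments if not any(ch.isalnum() for ch in c)]
    return len(low_effort) / len(comments)


def looks_inflated(reaches: list[int], comments: list[str],
                   cv_threshold: float = 0.05,
                   emoji_threshold: float = 0.6) -> bool:
    # Placeholder thresholds: tune them on accounts you've already checked by hand.
    return (reach_variation(reaches) < cv_threshold
            or low_effort_share(comments) > emoji_threshold)
```

Neither signal proves anything on its own. They're just a cheap way to decide which accounts deserve a closer manual look.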

Is there a difference in how social platforms are used across countries?

It turns out platform popularity depends completely on where you are. In some countries Facebook feels like a museum piece, while in others it's still the first place people go to look for creators. TikTok delivers plenty of views almost everywhere, but those views don't always turn into purchases: lots of attention, not a lot of buying. On the plus side, it's cheaper.

How do marketers discover new tools?

Often through trend-setters inside the marketing world, the people whose posts, reviews or casual mentions other marketers pay attention to. A tool shows up in a TikTok, a LinkedIn post, a newsletter or even a Google ad, gains a bit of momentum and suddenly everyone is checking it out.

What did we take from all this?

After talking through the whole process, two things became very clear:

- Influencer discovery is manual labor.

- Evaluating quality is even more manual.

Our working assumption now is that roughly 90% of this workflow can be automated or at least semi-automated without losing the human judgment that matters.

And about pricing…

If you’re curious about pricing for existing tools, it’s ambitious.

- basic tools start around $30–50/month

- mid-tier platforms for small/medium brands: $200–500/month

- enterprise influencer-marketing suites: $1,000+ per month, often custom-priced

So there’s definitely room for something more flexible and founder-friendly.

Looking ahead

By now it’s pretty clear that this project has two completely different brains living inside it. One is about finding influencers automatically. The other is about figuring out whether those influencers are actually any good.

This week we stayed on the discovery side: we tried a few automatic approaches for finding influencers, and those are the focus of the next chapter.

Weeks 3–4 will shift to the "are they real or are they just very committed to pretending?" part. I’ll put together a Jupyter notebook, pick one obviously fake influencer and one normal one and compare their stats side by side. With any luck, we’ll be able to see a clear, measurable difference, something that actually shows up in the data.
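For the side-by-side comparison, engagement rate is an obvious first column. Here is a rough sketch. It assumes the common definition of engagement rate: average likes plus comments per post, divided by followers. Which metrics the notebook will actually compare isn't decided yet, and `AccountStats` and `side_by_side` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class AccountStats:
    handle: str
    followers: int
    likes: list[int]     # likes per post
    comments: list[int]  # comments per post, same order as likes


def engagement_rate(acc: AccountStats) -> float:
    """Average (likes + comments) per post, divided by follower count."""
    per_post = [lk + cm for lk, cm in zip(acc.likes, acc.comments)]
    if acc.followers <= 0 or not per_post:
        return 0.0
    return sum(per_post) / len(per_post) / acc.followers


def side_by_side(a: AccountStats, b: AccountStats) -> str:
    """Render a tiny two-column text table for quick eyeballing."""
    rows = [
        ("followers", f"{a.followers:,}", f"{b.followers:,}"),
        ("posts", str(len(a.likes)), str(len(b.likes))),
        ("engagement", f"{engagement_rate(a):.2%}", f"{engagement_rate(b):.2%}"),
    ]
    lines = [f"{'metric':<12}{a.handle:>16}{b.handle:>16}"]
    lines += [f"{name:<12}{x:>16}{y:>16}" for name, x, y in rows]
    return "\n".join(lines)
```

In the notebook this would be fed with real scraped stats. The point of the helper is only to make a gap between the two accounts easy to spot.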

How Do We Actually Find Influencers Automatically?

During the challenge we already tried ChatGPT, Claude and Google Search through Bright Data’s MCP server. The results were inconsistent: outdated data, noisy links, and occasional hallucinated accounts.

Strictly speaking, we need something that works with fresher data and can actually retrieve it. In practice, that means either a tightly constrained Google search or a model with live web access, such as Perplexity.

This week we tried all of these options and added a Jupyter notebook where you can run them directly. Let’s look at the results.

1. Google → Instagram search (Bright Data Google SERP dataset)

This is the most literal approach: treat Google as a restricted Instagram search engine, using queries like:

site:instagram.com "sourdough" "NYC baker"

site:instagram.com "AI tools" OR "data engineer" OR "#buildinpublic"

site:instagram.com "indie maker" OR "solopreneur" "reels"
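If you want to generate queries like these instead of typing them by hand, a small helper is enough. This is a sketch under our own conventions. The hypothetical `build_instagram_query` quotes every term, joins alternatives inside a group with OR, and separates groups with spaces, mirroring the examples above. It only builds the string; it doesn't call Bright Data or Google.

```python
def quote(term: str) -> str:
    # Hashtags and multi-word phrases both go in as exact-match quoted terms.
    return f'"{term}"'


def build_instagram_query(*groups: list[str], site: str = "instagram.com") -> str:
    """Build a site-restricted Google query string.

    Terms inside a group are joined with OR; groups are separated by spaces,
    matching the hand-written example queries.
    """
    parts = [f"site:{site}"]
    for group in groups:
        parts.append(" OR ".join(quote(t) for t in group))
    return " ".join(parts)


print(build_instagram_query(["indie maker", "solopreneur"], ["reels"]))
# site:instagram.com "indie maker" OR "solopreneur" "reels"
```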

We did run into one issue here: parts of Bright Data's documentation are outdated. The Google SERP dataset has now fully moved under the Web Scraper API, so you no longer need to create a separate SERP API zone. You can call the dataset directly, pass the query and get the results in a single step.

The method is predictable if the query is specific enough, but still prone to SEO noise.
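Part of that noise is structural: a `site:instagram.com` search also returns post, reel and hashtag pages, not just profiles. Here is a minimal sketch of a post-filter that keeps only profile URLs and normalizes them to lowercase handles. The list of reserved path segments and the username pattern are our own assumptions, not an official Instagram specification.

```python
import re
from typing import Optional
from urllib.parse import urlparse

# Assumption: first path segments that are Instagram pages rather than usernames.
NON_PROFILE_SEGMENTS = {
    "p", "reel", "reels", "tv", "explore", "stories",
    "accounts", "about", "legal", "direct",
}
# Assumption: usernames are 1-30 chars of letters, digits, periods and underscores.
HANDLE_RE = re.compile(r"[a-z0-9._]{1,30}")


def profile_handle(url: str) -> Optional[str]:
    """Return the username for an Instagram profile URL, or None for anything else."""
    parsed = urlparse(url)
    if parsed.netloc.lower() not in {"instagram.com", "www.instagram.com"}:
        return None
    segments = [s for s in parsed.path.split("/") if s]
    # Profile URLs have exactly one path segment: instagram.com/<handle>/
    if len(segments) != 1:
        return None
    handle = segments[0].lower()
    if handle in NON_PROFILE_SEGMENTS or not HANDLE_RE.fullmatch(handle):
        return None
    return handle


def unique_handles(urls: list[str]) -> list[str]:
    """Keep profile handles only, deduplicated, in first-seen order."""
    seen: dict[str, None] = {}
    for url in urls:
        handle = profile_handle(url)
        if handle is not None:
            seen.setdefault(handle, None)
    return list(seen)
```

This doesn't fix SEO-optimized pages that rank for the wrong reasons. It does make sure whatever comes out is at least a profile you can check.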

2. Google → Instagram search (Bright Data AI Mode Google dataset)

I also found that the Web Scrapers Library now includes an AI-Google mode under the same interface, so it doesn't require any separate configuration. You just pass a natural-language prompt and it generates the Google query for you.

"Find Instagram profiles of NYC sourdough bakers. Use site:instagram.com and return profile URLs only."

The output comes back as structured JSON. It works, but the results are noticeably looser than strict keyword queries: sometimes correct, sometimes a bit too creative.
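The exact JSON shape can differ between datasets, and we'd rather not hardcode field names we haven't verified. One defensive option is to walk the whole response and pull out anything that looks like an Instagram profile URL. This is a sketch; the regex, the reserved-segment list and the function names are our own assumptions.

```python
import json
import re
from typing import Any, Iterator
from urllib.parse import urlparse

# Candidate Instagram links inside any string (stops at whitespace, quotes, brackets).
URL_RE = re.compile(r"https?://(?:www\.)?instagram\.com/[^\s\"'<>()\[\]]+")
# Assumption: path segments that are Instagram pages, not usernames.
RESERVED = {"p", "reel", "reels", "tv", "explore", "stories", "accounts"}


def walk_strings(node: Any) -> Iterator[str]:
    """Yield every string value anywhere in parsed JSON, whatever the schema."""
    if isinstance(node, str):
        yield node
    elif isinstance(node, dict):
        for value in node.values():
            yield from walk_strings(value)
    elif isinstance(node, list):
        for item in node:
            yield from walk_strings(item)


def profile_urls_from_json(raw: str) -> list[str]:
    """Collect unique, normalized Instagram profile URLs from a JSON response."""
    found: dict[str, None] = {}
    for text in walk_strings(json.loads(raw)):
        for match in URL_RE.findall(text):
            # Drop trailing sentence punctuation picked up from prose answers.
            path = urlparse(match.rstrip(".,;:")).path
            segments = [s for s in path.split("/") if s]
            if len(segments) == 1 and segments[0].lower() not in RESERVED:
                found.setdefault(f"https://www.instagram.com/{segments[0].lower()}/", None)
    return list(found)
```

The same function also works on Perplexity answers that mix prose and links. That makes it handy for comparing the methods on equal terms.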

3. Perplexity → Instagram search (Bright Data Web Scrapers Library)

Next we tried letting Perplexity handle the discovery directly inside the Web Scrapers Library. You provide a prompt and the scraper runs Perplexity’s browsing on top of it.

Example prompt: "Find Instagram profiles of NYC sourdough bakers. Return 15 profile URLs only. Prefer individuals, not brands."

The output is cleaner and more focused than AI-Google Mode, but there’s no control over which Perplexity model is used or how it behaves.

4. Direct Perplexity call (OpenRouter)

This gives the same type of results as the Web Scrapers version but with full control over the model. You can choose the exact Perplexity model, adjust its parameters and force the output format you want.
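OpenRouter exposes an OpenAI-compatible chat completions endpoint, so a direct call needs nothing beyond the standard library. Here is a minimal sketch. It assumes an `OPENROUTER_API_KEY` environment variable and the `perplexity/sonar` model slug; check OpenRouter's model list for the current names. The JSON-only system prompt is our way of forcing the output format, not an official option.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(prompt: str, model: str = "perplexity/sonar",
                  temperature: float = 0.2) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for OpenRouter."""
    body = {
        "model": model,  # assumption: verify the slug in OpenRouter's model list
        "temperature": temperature,
        "messages": [
            {"role": "system",
             "content": "Reply with a JSON array of Instagram profile URLs and nothing else."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ.get('OPENROUTER_API_KEY', '')}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_perplexity(prompt: str, **kwargs) -> list[str]:
    """Send the request and parse the model's reply as JSON."""
    with urllib.request.urlopen(build_request(prompt, **kwargs), timeout=60) as resp:
        payload = json.load(resp)
    content = payload["choices"][0]["message"]["content"]
    # Raises json.JSONDecodeError if the model ignored the format
    # (e.g. wrapped the array in a markdown fence); strip it first if needed.
    return json.loads(content)
```

Keeping request building separate from sending makes it easy to swap the model or temperature per experiment without touching the network code.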

While working on this method, we realized you don't have to ask for creators directly. You can also do it in two steps:

Step A: ask Perplexity for relevant hashtags

"Give me 10 Instagram hashtags used by indie makers and AI builders in 2024–2025."

Step B: ask for creators under those hashtags

"For each hashtag, list up to 10 active creators (<100K followers) with real engagement."
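The two-step variant is easy to wire up as a small pipeline. In this sketch, `ask` is any function that sends a prompt to the model and returns its text, for example a thin wrapper around an OpenRouter call. The hashtag parsing and exact prompt wording are our own assumptions. Making one call per hashtag, instead of the single "for each hashtag" prompt, is also our choice: it costs more calls but keeps each answer short and easier to parse.

```python
import re
from typing import Callable

HASHTAG_RE = re.compile(r"#\w+")


def extract_hashtags(text: str, limit: int = 10) -> list[str]:
    """Pull unique #hashtags out of free-form model output, in order."""
    seen: dict[str, None] = {}
    for tag in HASHTAG_RE.findall(text):
        seen.setdefault(tag.lower(), None)
    return list(seen)[:limit]


def two_step_search(ask: Callable[[str], str], niche: str,
                    max_followers: int = 100_000) -> dict[str, str]:
    """Step A: get hashtags for a niche. Step B: ask for creators per hashtag."""
    tags_text = ask(f"Give me 10 Instagram hashtags used by {niche}. List them as #hashtags.")
    results: dict[str, str] = {}
    for tag in extract_hashtags(tags_text):
        results[tag] = ask(
            f"For the hashtag {tag}, list up to 10 active creators "
            f"(<{max_followers // 1000}K followers) with real engagement."
        )
    return results
```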

We ended up turning the strongest variants into API endpoints inside Wykra, so you can try them directly via:

https://github.com/wykra-io/wykra-api

The full research notebook with output JSONs is here: https://github.com/wykra-io/wykra-api-python/blob/main/research/search.ipynb

You’re welcome to follow, leave a like or drop a star on the repo and if you have ideas or feedback, we’re always happy to hear them.